A graphics processing unit (GPU) is a type of computer processor that executes calculations quickly in order to produce graphics and images. Both business and consumer computers utilize GPUs. Despite their expanded use cases, GPUs are still typically used for rendering 2D and 3D pictures, animations, and videos.

The central processing unit (CPU) handled these calculations in the early days of computing. As graphics-intensive programs proliferated, however, the CPU's workload increased and performance suffered. GPUs were created to free up CPU resources for other tasks and to improve 3D graphics rendering. GPUs rely on a technique known as parallel processing, in which many processors execute independent parts of the same task at the same time.

GPUs are well known for enabling fluid, high-quality graphics rendering in PC (personal computer) games. Programmers have also adopted GPUs to speed up workloads in fields like artificial intelligence (AI).

What are GPUs used for today?

Because current GPUs are more programmable than they were in the past, graphics chips are now being used for a wider range of jobs than they were originally intended for.

Examples of GPU use cases are as follows:

- GPUs can speed up rendering in real-time 2D and 3D graphics applications.

- GPUs have improved video editing and video content development. The parallel processing of a GPU, for instance, can be used by graphic designers and video editors to speed up the rendering of high-definition video and graphics.

- Video game visuals have become more computationally demanding. Powerful GPUs are therefore needed to keep up with display technology such as 4K resolution and high refresh rates.

- GPUs are able to speed up machine learning. Workloads like image recognition can be improved using a GPU’s great computing power.

- Deep learning neural networks can be trained on GPUs, which take over work that would otherwise fall to the CPU. Each node in a neural network carries out calculations as part of an analytical model. Programmers eventually realized that GPUs offer far more parallelism than ordinary CPUs, which makes them well suited to the large matrix operations behind deep learning models. GPU vendors have taken note and now produce GPUs specifically for deep learning applications.

- Ethereum and other cryptocurrencies have also been mined using GPUs.

How Does a GPU Work?

A GPU can be found on the same chip as a CPU, on a graphics card, or on the motherboard of a personal computer or server. In terms of construction, GPUs and CPUs are fairly similar. GPUs, however, are designed specifically for the mathematical and geometric calculations needed to render visuals. A GPU may contain more transistors than a CPU.

GPUs use parallel computing, in which different tasks are handled by different processors at the same time. A GPU also has its own RAM (random access memory) for storing information about the images it processes. Information is stored about every pixel, including its location on the display. For monitors with analog inputs, a digital-to-analog converter (DAC) attached to the RAM converts the image from a digital signal to an analog signal so that the monitor can display it. Video RAM usually runs at high speeds.
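The per-pixel storage described above can be estimated with simple arithmetic. The sketch below is illustrative only: it assumes 4 bytes (32 bits) per pixel and a row-major layout, in which a pixel's location is implied by its position in memory. Neither assumption comes from a specific GPU, and the function names are hypothetical.

```python
def framebuffer_bytes(width, height, bytes_per_pixel=4):
    """Memory needed to hold one frame, assuming a fixed size per pixel."""
    return width * height * bytes_per_pixel


def pixel_offset(x, y, width, bytes_per_pixel=4):
    """Byte offset of pixel (x, y), assuming rows are stored one after another."""
    return (y * width + x) * bytes_per_pixel


full_hd = framebuffer_bytes(1920, 1080)
uhd_4k = framebuffer_bytes(3840, 2160)

print(f"1080p frame: {full_hd / 2**20:.1f} MiB")  # 1080p frame: 7.9 MiB
print(f"4K frame:    {uhd_4k / 2**20:.1f} MiB")   # 4K frame:    31.6 MiB
print(pixel_offset(0, 1, width=1920))             # 7680 (start of the second row)
```

In practice a GPU usually holds several such frames at once, along with textures and geometry, which is one reason video memory is measured in gigabytes.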

GPUs come in two different flavors: integrated and discrete. Integrated GPUs are built into the CPU itself, while discrete GPUs sit on a separate circuit board.

Hosting GPUs in the cloud may be a smart solution for businesses that need a lot of computational capacity, work with machine learning, or create 3D visualizations. One example is Google's Cloud GPUs, which offer powerful GPUs on Google Cloud. The advantages of hosting GPUs in the cloud include freeing up local resources, saving cost and time, and scaling more easily. Customers can choose from a variety of GPU types and adjust performance to match their needs.

GPU & CPU

The designs of GPUs and CPUs are somewhat similar. GPUs are made primarily to render high-resolution graphics and video quickly, whereas CPUs are employed to respond to and process the fundamental instructions that run a computer. In essence, CPUs handle the majority of command interpretation, while GPUs concentrate on rendering graphics.

A GPU is typically designed for data parallelism: applying the same instruction to many data elements at once (single instruction, multiple data, or SIMD). A CPU is built for task parallelism: carrying out several different operations and tasks simultaneously.
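The difference between the two styles can be sketched in ordinary Python. This is only a model of the structure, not real GPU code: Python threads do not execute in SIMD fashion, and the `brighten` function and pixel values are made up for illustration.

```python
from concurrent.futures import ThreadPoolExecutor


def brighten(pixel, amount=40):
    """The single operation applied to every data element (clamped at 255)."""
    return min(pixel + amount, 255)


pixels = [0, 100, 200, 250]

# Data parallelism (GPU style): one operation, many data elements.
with ThreadPoolExecutor(max_workers=4) as pool:
    brightened = list(pool.map(brighten, pixels))

# Task parallelism (CPU style): different operations running side by side.
with ThreadPoolExecutor(max_workers=2) as pool:
    total_future = pool.submit(sum, pixels)
    peak_future = pool.submit(max, pixels)
    total, peak = total_future.result(), peak_future.result()

print(brightened)   # [40, 140, 240, 255]
print(total, peak)  # 550 250
```

In the first block every worker runs the same function on a different pixel, which is the pattern a GPU is built to accelerate. In the second block each worker runs a different function, which is closer to how CPU cores divide up unrelated tasks.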

The number of cores is another way that the two differ. A core is essentially an independent processor inside the CPU. Most CPUs have between four and eight cores, although some contain 32 or more. A core may handle multiple tasks, or threads, at once. In some processors the number of threads can be substantially larger than the number of cores because of multithreading, which allows a single core to process two threads by virtually dividing the core in two. Workloads such as transcoding and video editing may benefit from this. A CPU core can typically run two threads (independent instruction streams), while each GPU core can handle four to ten threads.
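The thread counts above multiply out as follows. This is a rough sketch: the threads-per-core figures come from the paragraph above, while the 8-core CPU and the 2,048-core GPU are hypothetical round numbers chosen for illustration, not the specifications of any real product.

```python
def hardware_threads(cores, threads_per_core):
    """Total threads a processor can keep in flight at once."""
    return cores * threads_per_core


# Hypothetical 8-core CPU with two threads per core.
cpu_threads = hardware_threads(cores=8, threads_per_core=2)

# Hypothetical 2,048-core GPU with four to ten threads per core.
gpu_threads_low = hardware_threads(cores=2048, threads_per_core=4)
gpu_threads_high = hardware_threads(cores=2048, threads_per_core=10)

print(cpu_threads)                        # 16
print(gpu_threads_low, gpu_threads_high)  # 8192 20480
```

Even at the low end of that range, the hypothetical GPU keeps hundreds of times more threads in flight than the CPU, which is where its advantage on highly parallel work comes from.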

Due to its parallel processing architecture, which enables it to carry out many calculations at once, a GPU renders images more quickly than a CPU. A single CPU core does not have this capability, although multicore processors can perform calculations in parallel by combining several cores on one chip.

A CPU is frequently better suited to tackle simple computing tasks since it has a higher clock speed than a GPU, which enables it to complete calculations more quickly.

Top graphics cards in the market in 2023

Some of the key participants in the GPU market include Nvidia (maker of the GeForce line), AMD, Asus, Gigabyte, and MSI.


Before making a purchase, buyers should consider a graphics card's price, overall value, performance, features, amount of video memory, and availability. Capabilities such as ray tracing, 4K support, and frame rates of 60 fps or more may be attractive. In some cases cost will be the deciding factor, because some GPUs cost twice as much while providing only 10%–15% higher performance.
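The value trade-off in that last point can be made concrete with a quick calculation. The prices and benchmark scores below are hypothetical, chosen only to match the "twice the cost, 15% more performance" scenario. They do not describe real cards.

```python
def perf_per_dollar(score, price):
    """Benchmark points obtained for each dollar spent."""
    return score / price


# Hypothetical baseline card: score 100 at $500.
baseline = perf_per_dollar(score=100, price=500)

# Hypothetical premium card: 15% faster at twice the price.
premium = perf_per_dollar(score=115, price=1000)

relative_value = premium / baseline
print(f"Premium card value vs. baseline: {relative_value:.1%}")  # 57.5%
```

Under these assumptions the premium card delivers a little over half the performance per dollar of the baseline card, so it only makes sense when the extra performance itself is worth the premium.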

GPU History

Specialized processors for handling visuals have been available since the 1970s, when video games first became popular. Initially, a video card (a separate, dedicated circuit board with a silicon chip and the appropriate cooling) performed 2D, 3D, and occasionally even general-purpose (GPGPU) calculations for a computer. The term GPU describes contemporary graphics cards that have built-in transform, lighting, and triangle setup features for 3D applications. Higher-end GPUs, which were once uncommon, are now widely used and occasionally built directly into CPUs. Alternative terms include graphics card, display adapter, video adapter, video board, and nearly any combination of these words.

In the late 1990s, high-performance enterprise computers started to use graphics processing units. The GeForce 256, the first GPU created especially for home computers, was made available by Nvidia in 1999.

Over time, the processing capability of GPUs made them popular for resource-intensive jobs unrelated to graphics. Early uses of GPU computing included scientific calculations and modeling. By the middle of the 2010s, machine learning and AI software were also powered by GPU computing.

In 2012, Nvidia launched a virtualized GPU that offloads graphics processing from the server CPU in a virtual desktop infrastructure (VDI). Virtualized GPUs aim to solve the issue of poor graphics performance, which has historically been one of the most prominent complaints among users of virtual desktops and applications.

Nvidia GPU

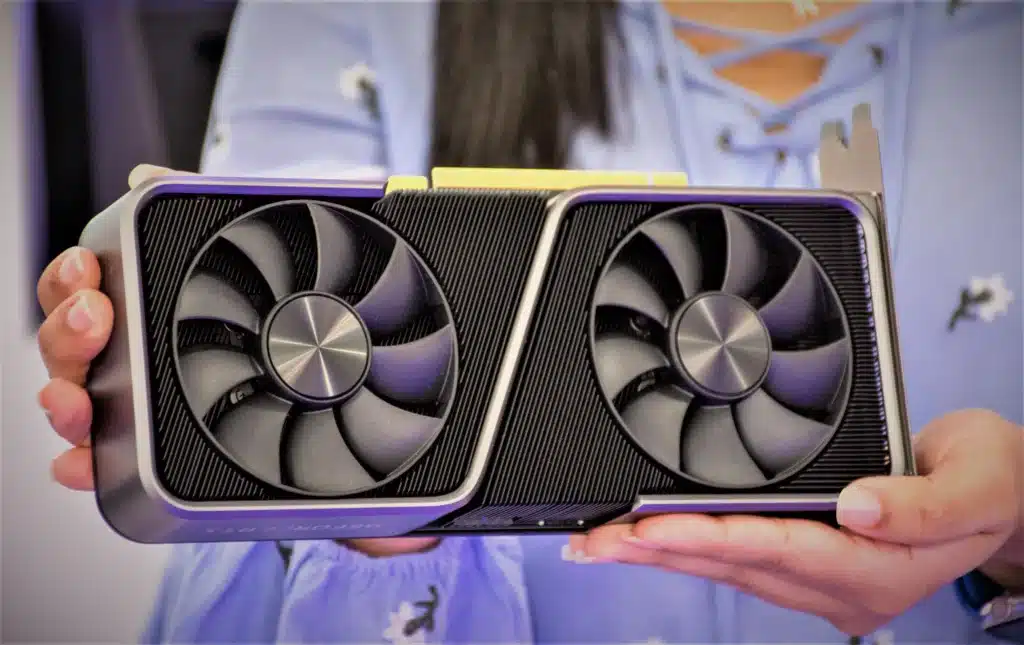

If you are looking for a new graphics card to upgrade your gaming PC, you might want to consider the latest offerings from Nvidia.

Nvidia is one of the leading makers of graphics cards. Its RTX 30 series, launched in 2020, promises to deliver stunning performance and realistic ray tracing effects.

The RTX 30 series includes models such as the RTX 3090, the RTX 3080, the RTX 3070, and the RTX 3060 Ti. Each model has different specifications and price points, but they all share some common features, such as:

- The Ampere architecture, Nvidia's second-generation RTX technology, which enables faster and more efficient rendering of complex scenes and lighting effects.

- DLSS (Deep Learning Super Sampling) technology, which uses artificial intelligence to boost the frame rate and image quality of games without sacrificing resolution or detail.

- Nvidia Reflex technology, which reduces the latency between your mouse, keyboard, and monitor, giving you a more responsive and competitive gaming experience.

- Nvidia Broadcast technology, which enhances your audio and video quality when streaming or video conferencing, using features such as noise removal, background blur, and auto frame.

The RTX 30 series is compatible with most modern PC games, especially those that support ray tracing and DLSS. Some of the popular titles that you can enjoy with these graphics cards are Cyberpunk 2077, Call of Duty: Black Ops Cold War, Watch Dogs: Legion, and Fortnite.

If you want to know more about the RTX 30 series and how to choose the best model for your needs and budget, you can visit Nvidia's official website or look at online reviews and benchmarks. You can also compare the RTX 30 series with other graphics cards from Nvidia or other brands, such as AMD.

Nvidia’s graphics cards are known for their high quality and performance, but they also come with a premium price tag. If you are willing to invest in a new graphics card that will enhance your gaming experience and future-proof your PC for years to come, you might want to consider the RTX 30 series as your next upgrade.

Asus GPU

Asus graphics cards are computer components that enhance the visual performance of your PC. They come in various models and series, such as ROG, TUF, Dual, Phoenix, and more.

Asus graphics cards use NVIDIA or AMD chipsets and have different memory sizes, types, and interfaces. You can buy Asus graphics cards online from Flipkart, Amazon, or the official Asus website.

Gigabyte GPU

Gigabyte is a Taiwanese hardware manufacturer, and a Gigabyte graphics card is a device that allows a computer to display high-quality images and videos on a monitor. The brand name should not be confused with the unit: like other graphics cards, Gigabyte cards carry dedicated video memory, usually measured in gigabytes (GB), that stores complex graphical data for processing.

A Gigabyte graphics card can enhance the performance of games, animations, simulations, and other applications that require intensive graphics processing.

Micro-Star International GPU

Micro-Star International (MSI) is a Taiwanese company that specializes in producing high-performance graphics cards for gaming and professional use. MSI graphics cards are known for their innovative cooling solutions, overclocking capabilities, and RGB lighting effects.

MSI graphics cards support various technologies, such as DirectX 12, NVIDIA GeForce RTX, and AMD Radeon RX. MSI graphics cards are compatible with most motherboards and PC cases and come with various features such as fan control, VR support, and warranty service.

Conclusion

In the realm of graphics processing units and general-purpose computing on graphics processing units, the potential for accelerating various tasks is immense. From scientific simulations to AI training and beyond, GPUs have reshaped the landscape of high-performance computing. Their parallel processing capabilities, energy efficiency, and versatility continue to drive innovations across diverse industries.


FAQ

What are the benefits of the GPU for the computer system?

The GPU, or graphics processing unit, is a specialized chip that handles the complex calculations and rendering of graphics on a computer system. The GPU can benefit the computer system in several ways, such as:

- Improving the performance and quality of graphics-intensive applications, such as video games, 3D modeling, video editing, and virtual reality.

- Accelerating the computation of parallel tasks, such as machine learning, scientific simulation, and cryptography.

- Reducing the workload and power consumption of the CPU, or central processing unit, which can improve the overall speed and efficiency of the computer system.

What is GPU computing, and how is it applied today?

GPU computing is the process of using a graphics processing unit (GPU) to perform calculations that are usually done by a central processing unit (CPU).

GPUs have thousands of cores that can work in parallel, making them faster and more efficient for some types of tasks.

GPU computing is applied today in various fields, such as scientific research, artificial intelligence, cryptocurrency mining, and more.

What does NVIDIA do?

NVIDIA is a company that specializes in designing graphics processing units (GPUs) for various applications, such as gaming, artificial intelligence, and high-performance computing. The chips themselves are manufactured by partner foundries.

GPUs are devices that can perform complex calculations on large amounts of data, such as images, videos, and 3D models.

Millions of users utilize NVIDIA GPUs to experience realistic and immersive graphics on their desktops, gaming consoles, and mobile devices.

Which GeForce card is the best?

There is no definitive answer to this question, as different GeForce cards have different features, performance, and prices that may suit different needs and budgets.

However, some general factors to consider when comparing GeForce cards are the CUDA cores, the memory size and type, the clock speed, the ray tracing and tensor cores, the power consumption, and the supported technologies.

For example, the RTX 40 series cards use a newer architecture with newer-generation ray tracing and tensor cores than the RTX 30 series cards, and the top models have more CUDA cores and memory, but they also tend to cost more and the top models can draw more power. The GTX 10 series cards are older and cheaper, but they do not support hardware-accelerated ray tracing or DLSS.

The best way to find out which GeForce card is best for you is to compare the specifications and benchmarks of different models using online tools or websites.